Creating Assignments that AI Can't Outsmart

Client: Thesis

Tools: Figma

Year: 2025

Role: Product Designer

Full Project Report: End-to-End Journey

The case study below presents a concise overview of the TraceEd project. For a more detailed walkthrough of the full end-to-end process, including in-depth research, design decisions, prototyping, and usability validation, you can explore the accompanying video and the complete project report here: Link to Project Report

Background

The rapid adoption of generative AI tools has fundamentally changed how students approach academic work. As of 2024, 92 percent of college students report using AI tools to brainstorm, outline, or generate coursework. While these tools introduce new efficiencies, they also expose a growing vulnerability in traditional assessment formats. Essays, summaries, and take-home assignments are increasingly easy to shortcut with AI, challenging long-standing models of academic integrity.

This shift has placed higher education at an inflection point. As AI becomes embedded in everyday student workflows, universities are under pressure to maintain academic standards without alienating students or overburdening faculty.

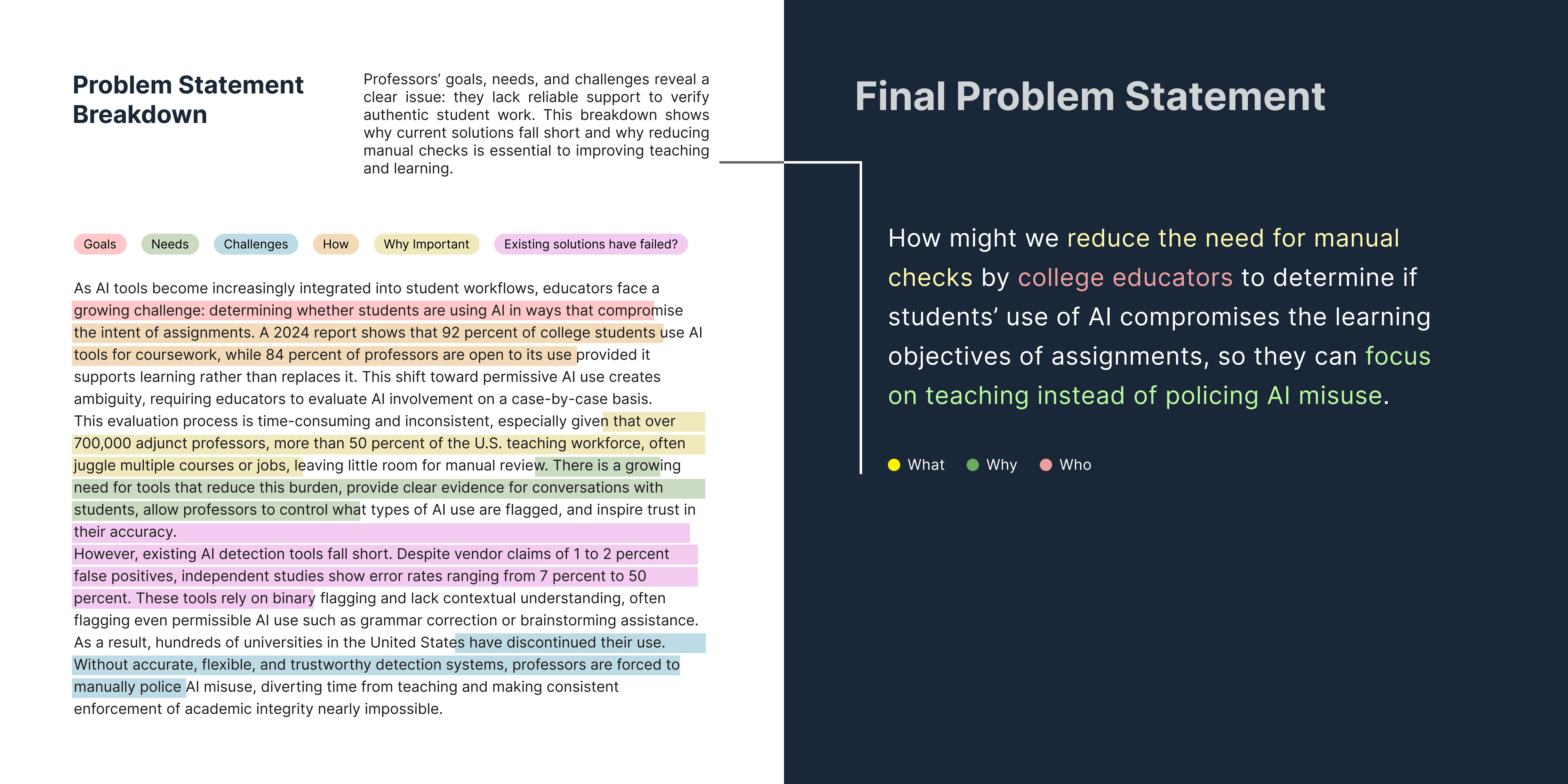

Problem

In response to rising AI misuse, universities have effectively transferred the responsibility of detection and enforcement to professors. Educators are now expected to act as investigators in addition to instructors.

This added role significantly increases cognitive and administrative load, especially since only 25 percent of faculty feel confident in identifying AI-generated content. To compensate, institutions have widely adopted AI detection tools, with 98 percent of Turnitin’s institutional customers enabling AI detection features. However, these tools have introduced new problems rather than resolving the old ones.

Across interviews, professors consistently reported false positives, false negatives, and a lack of actionable evidence. In fact, 11 out of 12 professors who had used detection tools described them as unreliable and ultimately stopped using them for assessment decisions. As a result, many educators fall back on manual review, despite lacking the time, confidence, or proof to act on suspected misuse.

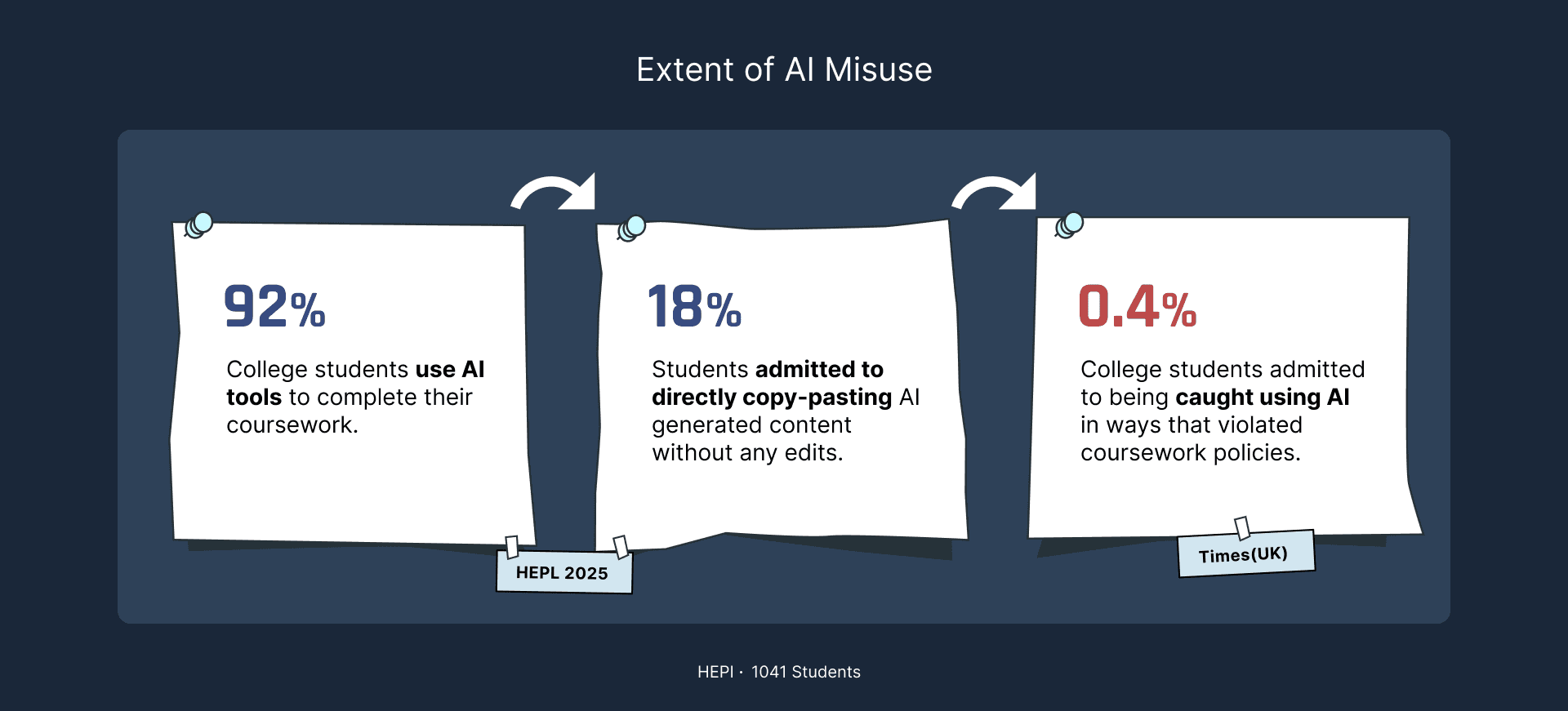

This breakdown has created a massive accountability gap. While 92 percent of students report using AI and 18 percent admit to copy-pasting AI content without edits, only 0.4 percent are ever caught. When misuse goes largely undetected, students lose opportunities to build critical thinking and writing skills, putting the long-term value of their education at risk.

Research Approach

To understand what existing systems fail to address and what educators actually need, I conducted a mixed-methods study that included:

In-depth interviews with 12 professors across five U.S. universities

A literature review covering AI detection tools, institutional policies, and misuse patterns

A student survey at Thomas Jefferson University with responses from 42 design students

The research focused on uncovering gaps between institutional tools, faculty realities, and learning outcomes.

Key Insights from Research

1. Detection accuracy is a persistent problem

Eighty-nine percent of professors who used detection tools reported frequent false positives and negatives. Several described situations where strong student writers were incorrectly flagged, making accusations too risky to pursue.

2. Acceptable AI use is indistinguishable from misuse

Current tools cannot differentiate between light AI assistance, such as grammar support, and full AI-generated submissions. This forces professors to manually review even legitimate work.

3. Manual review remains the default

Despite widespread access to detection tools, 93 percent of professors still rely on reading and evaluating work themselves. Over time, many abandoned detection tools altogether because they increased workload instead of reducing it.

4. Professors are not anti-AI

Every professor interviewed expressed openness to AI use, as long as it did not undermine learning outcomes. The concern was not AI itself, but whether students were still engaging meaningfully with course material.

Core Insight

Current detection tools are solving the wrong problem. They focus on the question, “Was AI used?” when educators are actually asking, “Did AI use interfere with learning?”

What professors need is not surveillance, but support in designing assignments where learning remains central, even in an AI-enabled environment.

Research Summary Video (10 min)

This video explores the core research that led to the final problem statement. It highlights key findings from student surveys and professor interviews, illustrating why traditional detection methods are failing and how the shift to Prevention by Design became the necessary path forward.

Solution Direction: Prevention by Design

Further research into AI detection technologies revealed a structural imbalance. Generative AI models receive far more investment than detection tools, which keeps detection reactive and short-lived. Detection tools can become outdated within months as the models they target evolve.

This led to a strategic shift away from post-submission policing toward preventing misuse at the assignment design stage. By designing assignments that emphasize contextual reasoning, personal reflection, and higher-order thinking, educators can naturally reduce AI misuse. These are areas where AI struggles to produce authentic, meaningful work without student input.

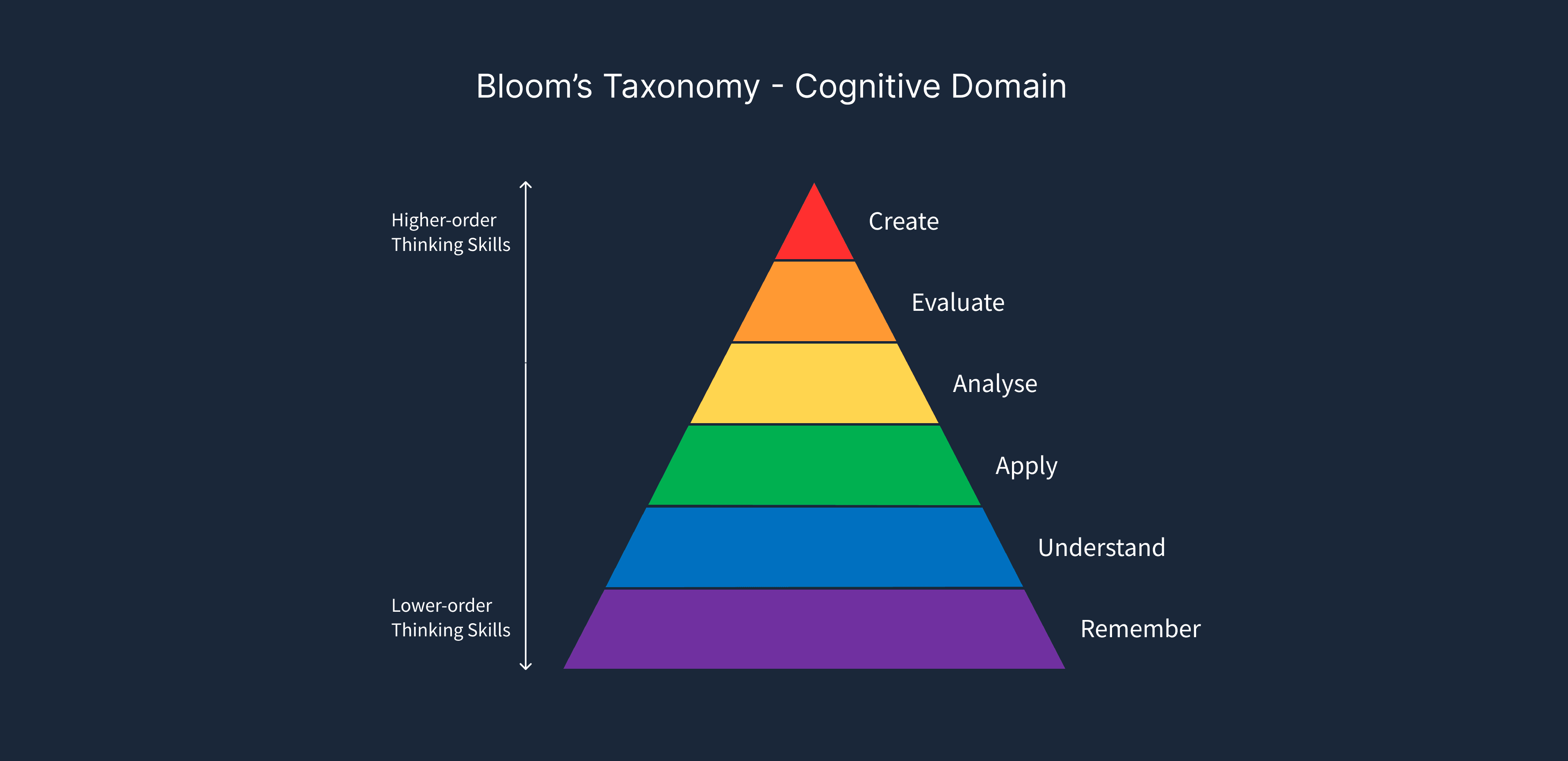

To make this approach measurable, assignments are evaluated using Bloom’s Taxonomy. Lower-order tasks like recall and summarization are highly vulnerable to AI, while higher-order tasks such as analysis, evaluation, and creation are significantly more resilient. The solution focuses on college-level education, where instructors have greater flexibility in assignment design compared to K-12 curricula.
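To make the Bloom's-level idea concrete, here is a minimal Python sketch, assuming a simple keyword approach: it scans an assignment prompt for cue verbs associated with each level of the revised Bloom's Taxonomy and reports which levels the prompt targets. The verb lists and the function names `bloom_levels_in` and `is_higher_order` are hypothetical illustrations, not how TraceEd actually classifies assignments.

```python
import re

# Revised Bloom's Taxonomy levels, ordered from lower- to higher-order thinking.
BLOOM_LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]

# Hypothetical cue verbs per level (illustration only; a real tool would need
# a much richer model than keyword matching).
CUE_VERBS = {
    "remember": {"define", "list", "recall", "identify", "name"},
    "understand": {"summarize", "explain", "describe", "paraphrase"},
    "apply": {"apply", "use", "demonstrate", "solve"},
    "analyze": {"analyze", "compare", "contrast", "examine"},
    "evaluate": {"evaluate", "critique", "justify", "assess", "defend"},
    "create": {"design", "create", "propose", "develop", "compose"},
}

HIGHER_ORDER = {"analyze", "evaluate", "create"}


def bloom_levels_in(prompt: str) -> list[str]:
    """Return the Bloom's levels cued by verbs in the prompt, lowest level first."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return [level for level in BLOOM_LEVELS if words & CUE_VERBS[level]]


def is_higher_order(prompt: str) -> bool:
    """True if the prompt cues at least one of analyze, evaluate, or create."""
    return any(level in HIGHER_ORDER for level in bloom_levels_in(prompt))
```

For example, "Summarize chapter 3." maps only to `understand`, while "Compare two case studies and justify your recommendation." maps to `analyze` and `evaluate`. Keyword matching ignores context and negation, so it serves here only to illustrate the lower-order versus higher-order distinction.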

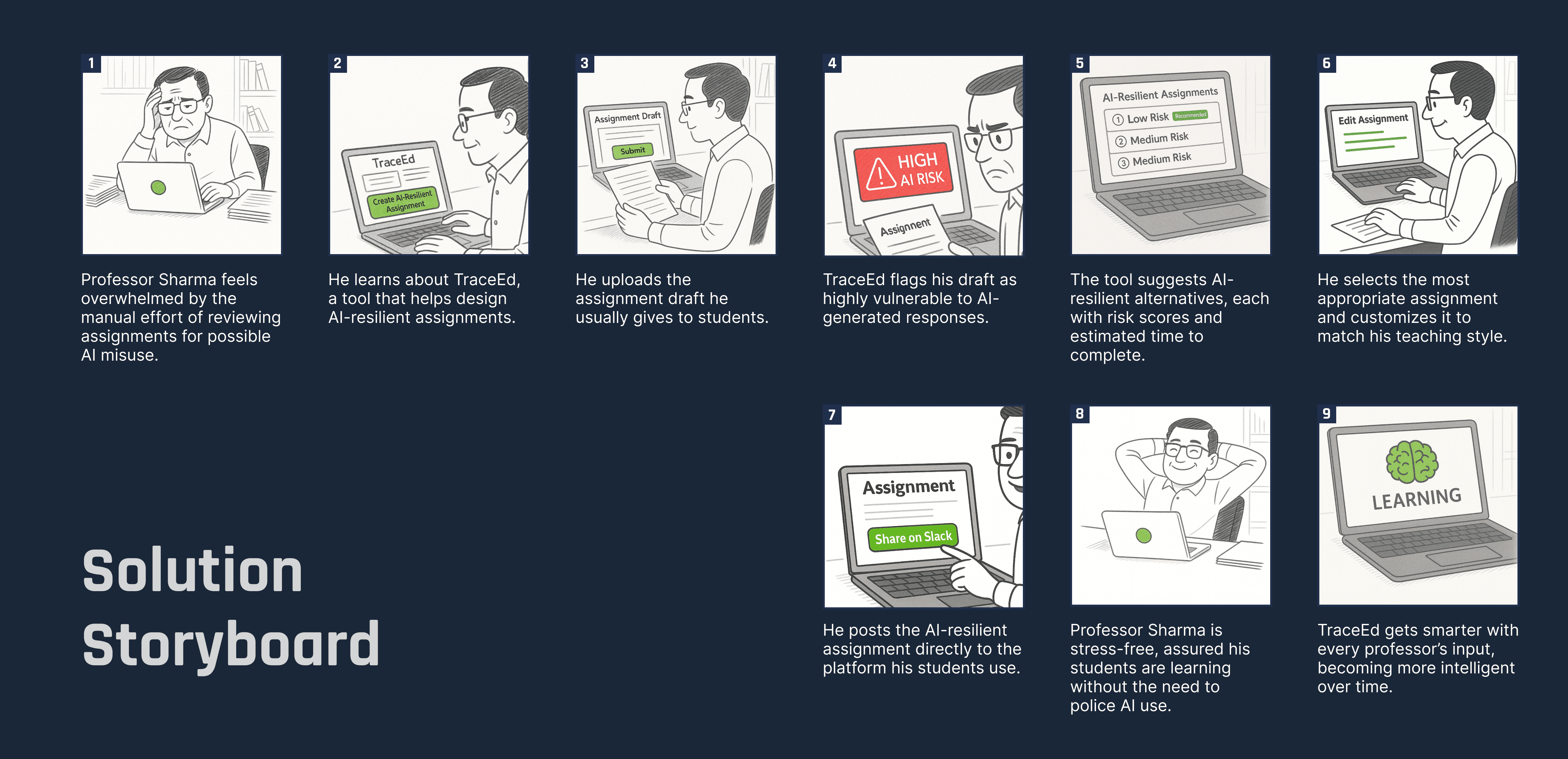

After extensive ideation and storyboarding, the solution converged on a simple but effective intervention: a tool that evaluates assignment drafts and shows professors where and how they can be strengthened against AI misuse.

The Product: TraceEd

The ideation led to the creation of TraceEd, a prevention-first platform that helps professors design AI-resilient assignments without increasing workload. Instead of acting as enforcers, educators are empowered to focus on teaching and learning outcomes.

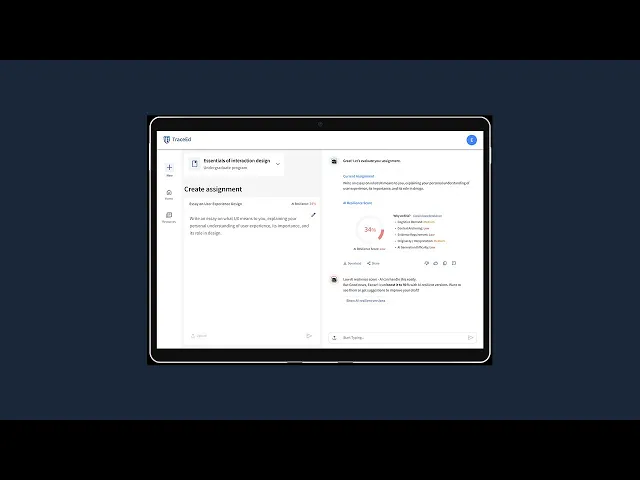

During onboarding, professors provide contextual details such as institution, course level, and subject area. This allows TraceEd to tailor recommendations appropriately, recognizing that expectations for graduate courses differ from those for undergraduate programs.

Professors can upload or paste existing assignment drafts into TraceEd. The platform evaluates the assignment’s vulnerability to AI use and generates an AI-resilience score, clearly highlighting weak points. TraceEd then suggests alternative prompts or modifications that preserve the original learning objectives while increasing resistance to AI shortcuts. The goal is that even if students use AI, the redesigned assignments still require them to meet the learning goals.
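The case study does not specify how the AI-resilience score is computed. As one hypothetical way to picture it, the Python sketch below combines an assumed resilience weight for the assignment's highest Bloom's level with small bonuses for personal reflection and course-specific context (two of the qualities identified earlier as hard for AI to produce without student input), and collects weak points along the way. All weights, field names, and the `resilience_score` function are invented for illustration and are not TraceEd's scoring model.

```python
from dataclasses import dataclass, field

# Hypothetical resilience weight per Bloom's level (0.0 = most vulnerable to AI).
LEVEL_RESILIENCE = {
    "remember": 0.1,
    "understand": 0.2,
    "apply": 0.4,
    "analyze": 0.7,
    "evaluate": 0.8,
    "create": 0.9,
}


@dataclass
class AssignmentFeatures:
    """Hypothetical features extracted from an assignment draft."""
    bloom_level: str                    # highest Bloom's level the prompt targets
    requires_personal_reflection: bool
    requires_course_context: bool       # e.g. in-class discussion or local data


@dataclass
class ResilienceReport:
    score: int                          # 0-100, higher means more resilient
    weak_points: list[str] = field(default_factory=list)


def resilience_score(f: AssignmentFeatures) -> ResilienceReport:
    base = LEVEL_RESILIENCE[f.bloom_level]
    weak_points = []
    if base < 0.5:
        weak_points.append(
            f"Targets '{f.bloom_level}', which AI tools can complete with little student input."
        )
    # Each contextual element adds a fixed, assumed bonus; the total is capped at 1.0.
    bonus = 0.0
    if f.requires_personal_reflection:
        bonus += 0.1
    else:
        weak_points.append("No personal reflection required.")
    if f.requires_course_context:
        bonus += 0.1
    else:
        weak_points.append("Does not draw on course-specific context.")
    score = round(min(base + bonus, 1.0) * 100)
    return ResilienceReport(score=score, weak_points=weak_points)
```

Under these assumed weights, a summary prompt (`understand`, no reflection, no course context) scores 20 and is flagged on all three counts, while a `create` prompt that also requires reflection and course context reaches the cap of 100.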

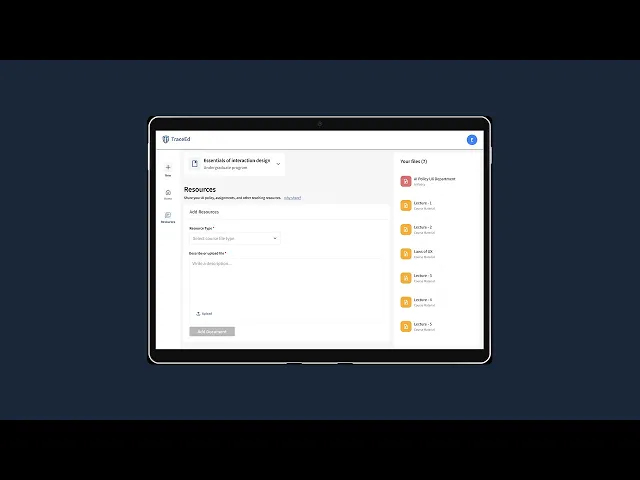

TraceEd also maintains a centralized resource repository. Professors can upload course materials, departmental guidelines, or institutional AI policies, which the system uses to generate more context-aware assignments. Updates to policies or materials automatically influence future recommendations.

Assignments can be saved as drafts, revisited, edited, published, or downloaded in multiple formats. This flexibility allows TraceEd to integrate seamlessly into existing teaching workflows rather than replacing them.